Conditionally debounce value updates, in Swift

Improve your iOS app's performance and enhance your users' experience by learning how to conditionally debounce events. Also, it's a panacea that solves everything. Ok, almost everything. Depending on how you look at it.

Recently, I've been working on an app that uses a camera. The feature I was working on involved detecting calibration markers in real time and making sure users could only take a picture if and when OpenCV detected them. In other words, the camera shutter button would only be enabled while OpenCV had detected the desired features.

However, while remarkably performant and more than capable of keeping up with the camera's frame rate, OpenCV would often, briefly and unpredictably, fail to detect the desired features, meaning that actually taking a picture pretty much involved racing against OpenCV. Good luck with that.

To make it easier for our users, and to make sure these mere mortals wouldn't have to become semi-professional StarCraft players just to use our UI, I decided to give them an edge by buffering the last valid frame for a few tenths of a second. Which meant implementing some sort of conditional debounce.

What is a debounce?

Debouncing is a technique used to optimize the performance of functions that are frequently triggered by user interactions or other events, such as scrolling, resizing, or typing.

When a debounced function is invoked, it starts a timer and waits for a specified period (debounce time) without any further calls to the function. If the function is called again within the debounce time, the timer is reset, and the waiting period starts again. The function is only executed once the debounce time has passed without any additional calls. This is particularly useful when dealing with events that generate a high number of rapid triggers, such as... text input. Or detecting features in real time in camera frames.

By grouping multiple successive calls into a single call and executing the function only after a period of inactivity, debouncing limits the number of times that function is executed.
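
To make this concrete, here is a minimal sketch of a debouncer built on Grand Central Dispatch. The `Debouncer` name and its `call(_:)` method are mine, for illustration only, and the sketch assumes every call comes from the same thread.

```swift
import Foundation

/// Illustrative debouncer: runs the most recent action only after `delay`
/// has elapsed without any further call.
/// Assumes `call(_:)` is always invoked from the same thread.
final class Debouncer {
    private let delay: TimeInterval
    private let queue: DispatchQueue
    private var pending: DispatchWorkItem?

    init(delay: TimeInterval, queue: DispatchQueue = .main) {
        self.delay = delay
        self.queue = queue
    }

    func call(_ action: @escaping () -> Void) {
        // A new call resets the timer: cancel whatever was waiting.
        pending?.cancel()

        let item = DispatchWorkItem { action() }
        pending = item

        // Run the action once the quiet period has passed.
        queue.asyncAfter(deadline: .now() + delay, execute: item)
    }
}
```

Calling `call(_:)` five times in quick succession runs only the last action, once, after the delay.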

Example: Search as you type

Let's take an example: search as you type.

We've all seen it. And when it's well done, we all love it.

When implementing search as you type, you may want to avoid sending a request to your backend at • every • single • user • keystroke. Because that would most likely involve sending many requests, cancelling them every time the user presses a new key, and making sure your concurrent requests don't generate race conditions (after all, why shouldn't the first request return after the second one?), or slow your UI to a crawl. By the way, if someone involved with the World of Hyatt iOS app ever reads this, yes, I would be more than willing to fix both of these issues for you. Seriously, are you guys running all those network calls on the main thread?

Debouncing allows us to group these keystrokes into a single string, and wait until the user stops typing away before sending our query to our backend. Where we would have had multiple requests to handle and cancel, we now have only one, sent once the user has slowed down.
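
As a rough illustration, here is one way this could look with Swift concurrency. `SearchDebouncer`, `queryChanged(_:)`, and the `search` closure are hypothetical names; the closure stands in for whatever actually sends the request to your backend, and the 0.3 second default is arbitrary.

```swift
import Foundation

/// Hypothetical helper: forwards a query only once typing has paused for `delay`.
/// Assumes `queryChanged(_:)` is always called from the same thread
/// (for example, the main thread).
final class SearchDebouncer {
    private let delay: TimeInterval
    private let search: @Sendable (String) async -> Void
    private var pendingTask: Task<Void, Never>?

    init(delay: TimeInterval = 0.3,
         search: @escaping @Sendable (String) async -> Void) {
        self.delay = delay
        self.search = search
    }

    func queryChanged(_ query: String) {
        // A new keystroke makes the previously scheduled search obsolete.
        pendingTask?.cancel()

        let nanoseconds = UInt64(delay * 1_000_000_000)
        let search = self.search
        pendingTask = Task {
            do {
                // Throws if the task is cancelled while waiting.
                try await Task.sleep(nanoseconds: nanoseconds)
            } catch {
                return
            }
            await search(query)
        }
    }
}
```

Typing "s", "sw", "swi", "swift" in quick succession would call `search` once, with "swift". Note that cancellation here only covers the waiting period: once a search has started, stopping it is up to the `search` closure itself.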

Debouncing, conditionally

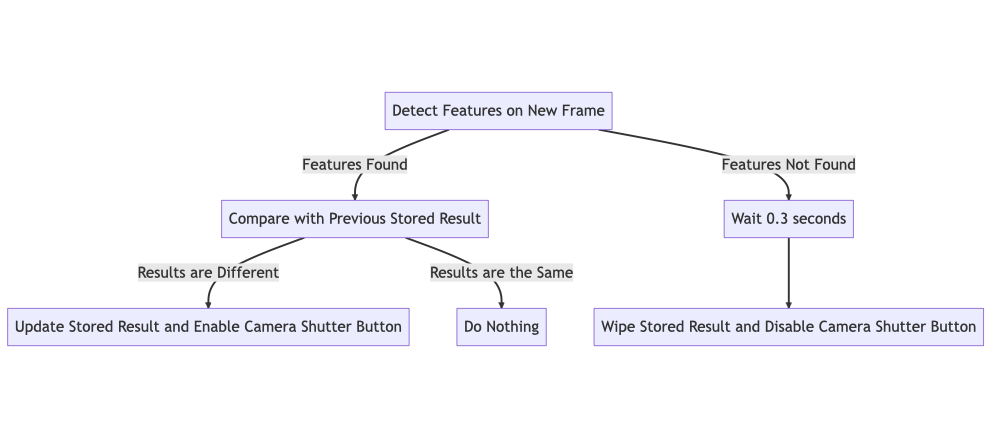

In my case, the first step is to detect the features on a new frame. When these features are successfully identified, they are compared with the previously stored result. If any differences are detected between the new and old results, the stored result is updated with the new values, and the camera shutter button is enabled. Consequently, pressing the shutter button will use these updated results. However, if the features cannot be found, the system waits for 0.3 seconds before clearing the stored result and disabling the camera shutter button.
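
The flow above could be sketched roughly as follows. This is a simplified illustration rather than the app's actual code: `ConditionalDebouncer`, `update(with:)`, and `onChange` are hypothetical names, and `nil` stands for "features not found". I am also assuming that the 0.3 second timer starts on the first failed frame and is not restarted by later failures, since otherwise a steady stream of failed frames would never clear the result.

```swift
import Foundation

/// Illustrative conditional debounce: successful values are applied immediately,
/// while failures (`nil`) only clear the stored value after `gracePeriod`.
/// Assumes `update(with:)` is always called on `queue`.
final class ConditionalDebouncer<Value: Equatable> {
    private(set) var value: Value?
    /// Called whenever the stored value changes (e.g. to toggle a shutter button).
    var onChange: ((Value?) -> Void)?

    private let gracePeriod: TimeInterval
    private let queue: DispatchQueue
    private var pendingClear: DispatchWorkItem?

    init(gracePeriod: TimeInterval = 0.3, queue: DispatchQueue = .main) {
        self.gracePeriod = gracePeriod
        self.queue = queue
    }

    func update(with newValue: Value?) {
        if let newValue = newValue {
            // Detection succeeded: abort any pending clear.
            pendingClear?.cancel()
            pendingClear = nil

            // Only store and notify when the result actually changed.
            guard newValue != value else { return }
            value = newValue
            onChange?(newValue)
        } else {
            // Detection failed: schedule a clear, unless there is nothing
            // to clear or a clear is already scheduled.
            guard value != nil, pendingClear == nil else { return }

            let item = DispatchWorkItem { [weak self] in
                guard let self = self else { return }
                self.pendingClear = nil
                self.value = nil
                self.onChange?(nil)
            }
            pendingClear = item
            queue.asyncAfter(deadline: .now() + gracePeriod, execute: item)
        }
    }
}
```

In the camera case, `Value` would be whatever the detection step returns, and `onChange` is where the shutter button would be enabled (non-nil value) or disabled (`nil`).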